Integrating Human Expertise With AI Content Generation

In 2026, the question is no longer whether you use AI, but how you control it. We saw the flood of generic, machine-generated articles that hit the web between 2023 and 2024, and we saw how quickly Google moved to penalize them. For businesses in India and beyond, the path forward isn’t abandoning automation; it is mastering the art of integrating human expertise with AI content generation.

As an SEO consultant who has navigated these shifts alongside business owners, I can tell you that the “set it and forget it” mentality is a fast track to irrelevance. The winners in today’s search results are those who treat AI not as a writer, but as a powerful junior analyst that requires strict senior oversight.

This guide breaks down the frameworks, workflows, and standards necessary to build a content engine that ranks high, earns trust, and converts traffic.

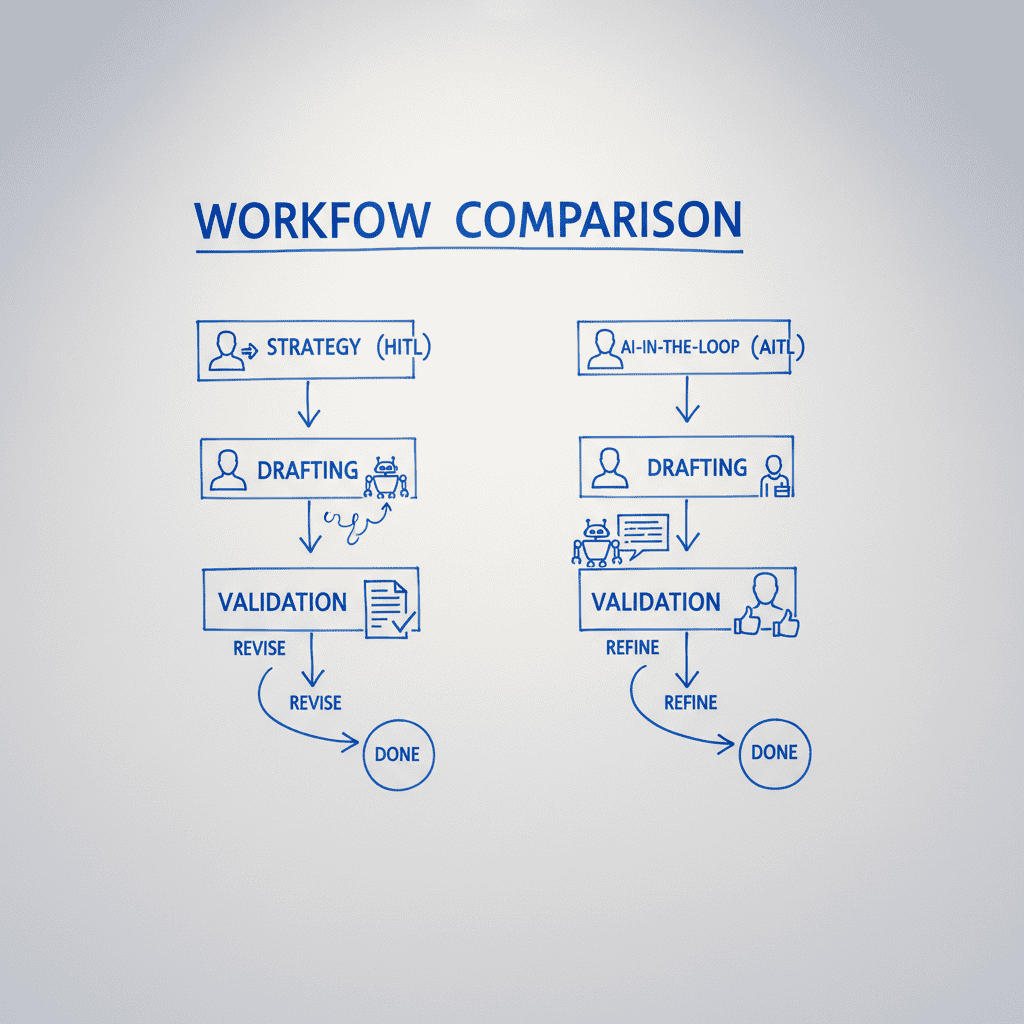

The New Standard: HITL and AITL Frameworks

To understand how we effectively combine machines and minds, we must look at the structural frameworks defined by leading research bodies. It is not enough to simply “edit” AI text; we need a system. The National Institutes of Health (NIH) has outlined specific paradigms that are now the gold standard for high-stakes content industries like Health Economics and Outcomes Research (HEOR).

Defining the Models

There are two primary ways to approach this integration:

- Human-in-the-Loop (HITL): The AI generates the initial output, but a human expert validates, refines, and approves every piece of data before it goes live. This is non-negotiable for YMYL (Your Money, Your Life) topics.

- AI-in-the-Loop (AITL): Humans lead the creative process, drafting the core narrative and strategy, while AI is used for support tasks like checking parameters or suggesting variations.

A 2026 NIH study proposes a hybrid intelligence framework that explicitly uses these paradigms to ensure accuracy in complex reporting. Here is how these frameworks compare in a practical business context:

| Feature | Human-in-the-Loop (HITL) | AI-in-the-Loop (AITL) |
|---|---|---|
| Primary Driver | Artificial Intelligence (Drafting) | Human Expert (Drafting) |
| Human Role | Validator, Editor, Fact-Checker | Strategist, Creator, Decision Maker |
| Best Use Case | Data synthesis, routine reports, summaries | Thought leadership, opinion pieces, brand storytelling |
| Risk Profile | Higher (requires strict oversight) | Lower (human controls the narrative) |
| Efficiency Gain | High (speed in volume) | Moderate (enhancement of quality) |
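To make the HITL column concrete, here is a minimal sketch of a publish gate in Python. The `Claim` and `Draft` classes and the reviewer name are hypothetical, invented for illustration. The sketch assumes each factual statement in an AI draft is tracked separately and needs a named human approver before the piece goes live.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Claim:
    """One factual statement in an AI-generated draft (hypothetical model)."""
    text: str
    approved_by: Optional[str] = None  # reviewer name once a human signs off


@dataclass
class Draft:
    title: str
    claims: List[Claim] = field(default_factory=list)

    def unapproved(self) -> List[Claim]:
        """Claims that no human expert has signed off on yet."""
        return [c for c in self.claims if c.approved_by is None]

    def can_publish(self) -> bool:
        """HITL rule: publish only when every claim has a human approver."""
        return not self.unapproved()


draft = Draft(
    title="Quarterly traffic summary",
    claims=[
        Claim("Organic sessions rose quarter over quarter."),
        Claim("Most new traffic came from mobile.", approved_by="Senior editor"),
    ],
)
print(draft.can_publish())  # False: one claim still lacks sign-off
draft.claims[0].approved_by = "Senior editor"
print(draft.can_publish())  # True
```

In an AITL setup the gate matters less, because the human wrote the claims in the first place. The same structure can still record who checked what.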

Why Frameworks Matter for SEO

Using a structured approach like HITL ensures that you are not just generating noise. When we discuss the future of SEO with AI trust signals, we are talking about the ability to prove to search engines that a qualified human has vetted the information. Without a framework, that proof is missing.

E-E-A-T and The Trust Factor

Google’s E-E-A-T (Experience, Expertise, Authoritativeness, Trustworthiness) guidelines are the filter through which all AI content is judged. Since the March 2024 core update, many sites relying on unedited, mass-produced AI content have been de-indexed or have dropped sharply in the rankings.

The Role of “Experience”

Generative AI (Gen-AI) operates on probability, not experience. It can predict the next word in a sentence, but it cannot recount a client meeting in Mumbai or the specific challenges of a local supply chain.

To integrate human expertise effectively, you must inject:

• Personal Anecdotes: Real-world examples that AI cannot fabricate.

• Nuanced Opinion: Taking a stance on industry trends rather than just summarizing them.

• First-Party Data: Using your own customer data or case studies.

Integranxt highlights that in publishing, while AI can refine grammar, human editors are essential to preserve narrative depth and audience connection. This human touch is what signals “Experience” to Google’s algorithms.

Operational Workflows: Who Does What?

Implementing this in your business requires clear role delineation. If you leave the lines blurry, your team will over-rely on tools, leading to generic output.

Step-by-Step Hybrid Workflow

Below is a proven workflow I recommend for clients looking to scale content without sacrificing quality:

- Strategy & Ideation (Human Led):

• Define the user intent and unique angle.

• Select the keywords and internal linking opportunities.

• Tool: Competitor analysis tools, customer interviews.

- Research & Drafting (AI Supported):

• Use AI for literature reviews or creating structured outlines.

• Bulmore Consulting notes that AI is excellent for assisting in drafting and outlining, but humans must ensure the narrative aligns with strategic goals.

• Tool: LLMs with RAG (Retrieval Augmented Generation) capabilities.

- Validation & Synthesis (Human Critical):

• Verify every statistic and claim. AI hallucinates; humans verify.

• Inject brand voice and specific examples.

• Action: Cross-reference data points with primary sources.

- Optimization & Publishing (Hybrid):

• AI checks for technical SEO (meta tags, schema).

• Humans review for readability and emotional resonance.

• Context: This is crucial when building a personal brand with AI, where authenticity is your currency.
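The validation step above can be partly supported by tooling. The sketch below is a rough heuristic, not an established method: it pulls out every sentence in a draft that contains a digit so a human reviewer has a checklist of statistics to cross-reference. The function name `flag_for_verification` and the sample text are assumptions made for illustration. The sketch will miss claims that contain no numbers, so it supplements a full read rather than replacing it.

```python
import re
from typing import List

# Deliberately broad heuristic: any digit marks a sentence as "has a statistic".
_HAS_NUMBER = re.compile(r"\d")
# Split after ., ! or ? when followed by whitespace (so "3.5" stays intact).
_SENTENCE_BREAK = re.compile(r"(?<=[.!?])\s+")


def flag_for_verification(draft_text: str) -> List[str]:
    """Return the sentences a human should check against primary sources."""
    sentences = _SENTENCE_BREAK.split(draft_text.strip())
    return [s for s in sentences if _HAS_NUMBER.search(s)]


sample = "Our clients are happy. Traffic grew 40% in 2025. We love SEO!"
for sentence in flag_for_verification(sample):
    print("VERIFY:", sentence)
```

The output is only a queue for the reviewer. Deciding whether each figure is correct remains a human task.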

Accuracy: The Hallucination Problem

One of the biggest risks in integrating human expertise with AI content generation is the “confident lie.” AI models can sound incredibly convincing while being factually wrong.

The RAG Solution

Retrieval Augmented Generation (RAG) is a technique where the AI is forced to reference a specific set of documents (like your own whitepapers or trusted databases) before generating an answer. This significantly reduces hallucinations compared to using a raw LLM.
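As a rough illustration of the idea, the Python sketch below retrieves the most relevant approved documents for a question and builds a prompt that tells the model to answer only from them. Everything here is an assumption for demonstration: the document ids, the sample texts, and the word-overlap scoring, which is a toy stand-in for the embedding-based search that production RAG systems typically use. The resulting prompt would then be sent to whichever LLM you use; that call is not shown.

```python
import re
from typing import Dict, List, Set, Tuple


def _tokens(text: str) -> Set[str]:
    """Lowercased alphanumeric words, used for a toy relevance score."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question: str, documents: Dict[str, str], top_k: int = 2) -> List[Tuple[str, str]]:
    """Rank documents by how many words they share with the question."""
    q = _tokens(question)
    scored = [(len(q & _tokens(body)), doc_id, body) for doc_id, body in documents.items()]
    scored = [item for item in scored if item[0] > 0]  # drop irrelevant docs
    scored.sort(key=lambda item: (-item[0], item[1]))  # best score first
    return [(doc_id, body) for _, doc_id, body in scored[:top_k]]


def build_grounded_prompt(question: str, sources: List[Tuple[str, str]]) -> str:
    """Assemble a prompt that restricts the model to the retrieved sources."""
    source_block = "\n".join(f"[{doc_id}] {body}" for doc_id, body in sources)
    return (
        "Answer using ONLY the sources below and cite their ids in brackets. "
        "If the sources do not contain the answer, say so.\n\n"
        f"{source_block}\n\nQuestion: {question}"
    )


# Hypothetical in-house documents.
docs = {
    "crawl-guide": "Crawl budget matters for large ecommerce sites.",
    "eeat-note": "Google E-E-A-T rewards first-hand experience.",
    "pricing": "Our retainer pricing starts monthly.",
}
question = "How does E-E-A-T reward experience?"
print(build_grounded_prompt(question, retrieve(question, docs, top_k=1)))
```

The human role stays in place on both sides: someone decides which documents go into `docs`, and someone checks that the cited ids actually support the answer.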

Recent advancements are promising. A University of Washington study found that the AI model OpenScholar synthesizes scientific research and cites sources as accurately as human experts. This suggests that with retrieval-based tools (RAG) and careful prompts, AI can handle high-level research tasks, provided a human verifies the source selection.

Measuring ROI and Efficiency

Many businesses hesitate to adopt a hybrid model because they fear it is too expensive compared to “cheap” AI content or too slow compared to pure human writing. The data suggests otherwise.

Efficiency Metrics

Industry analysis suggests that a well-tuned hybrid workflow can improve content production efficiency by 30-50% while maintaining quality standards. However, this depends on the complexity of the task.
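To see what that range could mean in hours, here is a small worked calculation in Python. It rests on two assumptions that are not from the cited analysis: that an "efficiency gain" means a proportional cut in hours per article, and that the baseline is 5 hours per article, a hypothetical figure. The volume of 20 articles per month is also illustrative.

```python
def hours_per_article(baseline_hours: float, gain_percent: float) -> float:
    """Hours per article after a given percentage reduction in effort."""
    if not 0 <= gain_percent < 100:
        raise ValueError("gain_percent must be in [0, 100)")
    return baseline_hours * (100 - gain_percent) / 100


def monthly_hours_saved(baseline_hours: float, gain_percent: float, articles: int) -> float:
    """Total hours saved per month at a given publishing volume."""
    saved_each = baseline_hours - hours_per_article(baseline_hours, gain_percent)
    return saved_each * articles


BASELINE_HOURS = 5.0      # hypothetical human-only effort per article
ARTICLES_PER_MONTH = 20   # hypothetical publishing volume

for gain in (30, 50):
    per_article = hours_per_article(BASELINE_HOURS, gain)
    saved = monthly_hours_saved(BASELINE_HOURS, gain, ARTICLES_PER_MONTH)
    print(f"{gain}% gain: {per_article} h per article, {saved} h saved per month")
```

Under these assumptions the range works out to 2.5 to 3.5 hours per article, or 30 to 50 hours saved per month. The result scales directly with the baseline, so substitute your own measured figures before budgeting on it.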

| Metric | Pure Human | Pure AI | Hybrid (Optimized) |
|---|---|---|---|
| Time per Article | 4-6 Hours | 5 Minutes | 1-2 Hours |
| Cost | High | Low | Moderate |
| Accuracy | High | Low/Variable | High |
| Ranking Potential | High | Low (Risk of Penalty) | High |
| Creativity | High | Low | High |

When you are deciding where to allocate your budget, think about the long-term cost of cleanup. Fixing a site hit by a Google penalty costs far more than doing it right the first time. This logic applies whether you are a global enterprise or just choosing between local and national SEO strategies.

Key Takeaways

• Adopt a Framework: Use HITL (Human-in-the-Loop) for accuracy-critical tasks and AITL (AI-in-the-Loop) for creative drafting.

• Prioritize Experience: Inject personal stories and first-hand data to satisfy Google’s E-E-A-T requirements.

• Verify Everything: Even advanced models hallucinate. Human validation is the safety net that protects your brand reputation.

• Use RAG Tools: Ground your AI in specific, trusted data sources to improve citation accuracy and reduce errors.

• Focus on ROI: Hybrid workflows offer the best balance of speed, cost, and sustainable ranking power.

FAQ Section

1. What is the difference between HITL and AITL?

HITL (Human-in-the-Loop) means the AI does the work and a human checks it. AITL (AI-in-the-Loop) means a human does the work and uses AI as an assistant or tool. HITL is better for scaling production, while AITL is better for high-level strategy and creative nuance.

2. Can AI cite sources accurately?

Yes, but it requires specific tools. Recent studies show models like OpenScholar can match human accuracy in citation when using Retrieval Augmented Generation (RAG). However, generic chatbots often invent citations, so human verification is still required.

3. Will Google penalize my content if I use AI?

Google does not penalize content just because it is AI-generated. It penalizes content that is low-quality, inaccurate, or designed solely to manipulate search rankings. Integrating human expertise ensures your content remains helpful and high-quality, avoiding these penalties.

4. How much time does a hybrid workflow save?

Typically, businesses see efficiency gains of 30-50%. While it is not as fast as one-click generation, it avoids the extensive time required to rewrite poor-quality AI drafts or recover from SEO penalties.

5. What role does prompt engineering play?

Prompt engineering is the skill of guiding the AI to produce better initial outputs. By giving the AI specific personas, constraints, and data sources (context), you reduce the amount of editing required by the human expert.
