Structuring Content for LLM Citation: The 2026 Authority Guide

We have entered a new era of search where being “read” by a human is secondary to being “parsed” by a machine. As an SEO consultant analyzing the shift toward Generative Engine Optimization (GEO), I see a fundamental misunderstanding in how businesses approach content today. They are still writing for eyes, while the algorithms are looking for patterns.

Structuring content for LLM citation is no longer just about readability; it is about interoperability. If an AI cannot mathematically map the relationship between your header, your claim, and your evidence, it will ignore you. It does not matter how persuasive your prose is if the semantic signal is weak.

In this guide, I will break down the precise structural engineering required to make your content citation-ready for models like GPT-4, Gemini, and Claude. We are moving beyond keywords into the realm of tokenization, semantic hierarchy, and explicit entity signaling.

Key Takeaways

• Semantic Hierarchy is Non-Negotiable: LLMs rely on H1-H3 structures to understand relationship nesting; flat content fails citation tests.

• Schema is the Vocabulary of AI: Explicit markup (JSON-LD) reduces inference errors, directly impacting citation probability.

• Modularity Wins: Standalone sections with clear answers are retrieved more often than long, woven narratives.

• Freshness Signals: A frequently updated article outperforms a static one, even if the static one was published more recently.

The Mechanics of LLM Parsing: Why Structure Matters

To understand how to structure your content, you must first understand how a Large Language Model (LLM) consumes it. Unlike a human reader who scans for narrative flow, an LLM processes text as a sequence of tokens. It looks for statistical probabilities and semantic markers to determine if a specific chunk of text answers a query.

According to the Fibr AI 2026 content optimization guide, LLMs parse content by patterns and signals rather than reading linearly. This means that “walls of text” (long, unbroken paragraphs without structural signposts) make your content harder for a model to use: the token distance between the entity (the subject) and the predicate (the action or fact) becomes too great, and the model effectively loses the thread of context.

Tokenization and Context Windows

Every piece of content you write is broken down into tokens (roughly 0.75 words per token). LLMs have finite context windows. If your answer to a specific question is buried inside a 3,000-token narrative without clear boundaries, the retrieval mechanism in a RAG (Retrieval-Augmented Generation) system may fail to isolate it.

I often explain to clients that the future of SEO for professional firms relies on this granularity. You must structure your content so that individual sections can stand alone as “micro-documents” that an AI can extract and cite without needing the rest of the page.
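To make the “micro-document” idea concrete, here is a minimal Python sketch (standard library only) that splits an HTML fragment at every H2 or H3 so each chunk keeps its own heading as context. The class `SectionSplitter` and the helper `split_sections` are hypothetical illustrations, not part of any retrieval product; real RAG pipelines use their own chunkers and size limits.

```python
from html.parser import HTMLParser


class SectionSplitter(HTMLParser):
    """Hypothetical helper: starts a new section at every <h2> or <h3>."""

    HEADINGS = {"h2", "h3"}

    def __init__(self):
        super().__init__()
        self.sections = []  # list of {"heading": ..., "text": ...}
        self._in_heading = False
        self._heading = ""
        self._body = []

    def _flush(self):
        # Save the section collected so far, normalizing whitespace.
        heading = self._heading.strip()
        text = " ".join(" ".join(self._body).split())
        if heading or text:
            self.sections.append({"heading": heading, "text": text})
        self._heading = ""
        self._body = []

    def handle_starttag(self, tag, attrs):
        if tag in self.HEADINGS:
            self._flush()  # a new heading closes the previous section
            self._in_heading = True

    def handle_endtag(self, tag):
        if tag in self.HEADINGS:
            self._in_heading = False

    def handle_data(self, data):
        if self._in_heading:
            self._heading += data
        else:
            self._body.append(data)

    def close(self):
        super().close()
        self._flush()  # keep the final section


def split_sections(html: str) -> list:
    parser = SectionSplitter()
    parser.feed(html)
    parser.close()
    return parser.sections


page = (
    "<h2>Tokenization and Context Windows</h2><p>LLMs read text as tokens.</p>"
    "<h3>Micro-documents</h3><p>Each section should stand alone.</p>"
)
for section in split_sections(page):
    print(section["heading"], "->", section["text"])
```

Because every chunk carries its heading, a retriever that pulls only one chunk still knows what that chunk is about.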

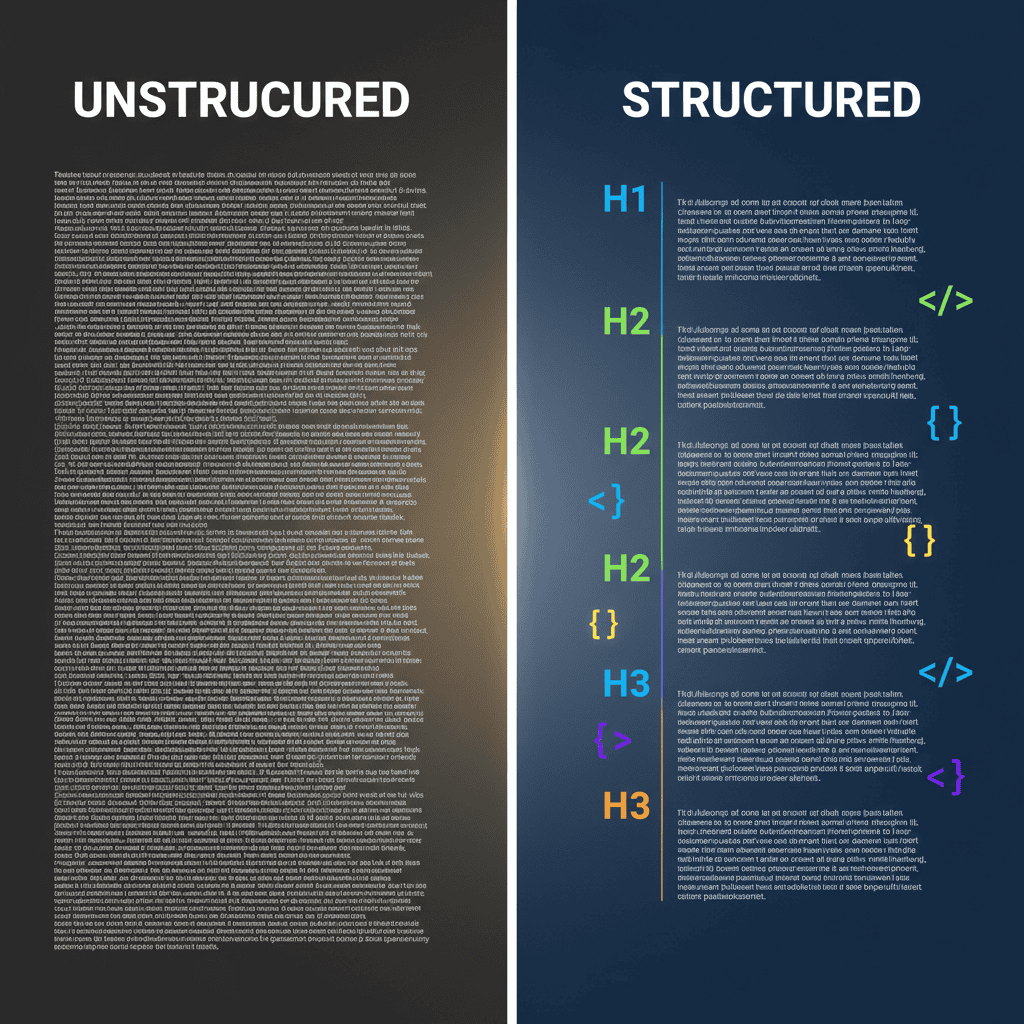

Core Architecture: Semantic HTML and Hierarchy

The most immediate lever you can pull is strictly adhering to semantic HTML standards. This isn’t just code hygiene; it is the map the AI uses to navigate your arguments.

The H-Tag Hierarchy

Your heading structure must explicitly signal the relationship between ideas. I see too many sites using H2s and H3s for aesthetic sizing rather than logical nesting. For an LLM, an H2 signals a major topic, and an H3 signals a child attribute of that topic.

Best Practice Hierarchy:

- H1: The Main Entity (The Article Topic)

- H2: The Sub-Entity or Main Argument

- H3: Specific Attribute, Data Point, or Answer related to the H2

If you break this hierarchy, you break the semantic link. For example, if you jump from an H2 to plain text that introduces a new concept without a header, the LLM has to “guess” if the text belongs to the previous section or starts a new one. Guessing leads to hallucinations or exclusion.
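One rough way to catch a broken hierarchy is to record heading levels in document order and flag any jump that skips a level (for example, H2 straight to H4). This is a minimal Python sketch with hypothetical names; the “exactly one H1” rule is an assumption mirroring the Best Practice Hierarchy above, not a requirement of the HTML specification.

```python
from html.parser import HTMLParser


class HeadingCollector(HTMLParser):
    """Records the level of every h1-h6 start tag in document order."""

    def __init__(self):
        super().__init__()
        self.levels = []

    def handle_starttag(self, tag, attrs):
        if len(tag) == 2 and tag[0] == "h" and tag[1] in "123456":
            self.levels.append(int(tag[1]))


def hierarchy_problems(html: str) -> list:
    parser = HeadingCollector()
    parser.feed(html)
    parser.close()
    problems = []
    h1_count = parser.levels.count(1)
    if h1_count != 1:
        # Assumed convention: one H1 for the main entity of the page.
        problems.append(f"expected exactly one h1, found {h1_count}")
    for prev, cur in zip(parser.levels, parser.levels[1:]):
        # Going deeper by more than one level skips a parent heading.
        if cur > prev + 1:
            problems.append(f"h{prev} -> h{cur} skips a level")
    return problems


print(hierarchy_problems("<h1>Guide</h1><h2>Schema</h2><h4>sameAs</h4>"))
```

Moving back up (H3 to H2) is allowed; only downward jumps that skip a level are reported.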

Comparison: Human vs. LLM Reading Patterns

| Feature | Human Reader Experience | LLM Parsing Mechanism | Impact on Citation |
|---|---|---|---|
| Heading Tags | Visual breaks for scanning. | Hard delimiters for topic segmentation. | Critical: Defines extraction boundaries. |
| Paragraph Length | Prefers flow and narrative. | Prefers concise token clusters. | High: Short blocks reduce context drift. |
| Lists (ul/ol) | Easy to skim. | Structured data extraction. | Very High: Lists are prioritized for “Step-by-Step” answers. |
| Bold Text | Emphasis/Tone. | Salience signal (Important Entity). | Moderate: Highlights key entities. |
| Tables | Data comparison. | Structured relational database. | Extreme: The easiest format for LLMs to cite accurately. |

The Data Layer: Schema and Structured Data

While HTML provides the skeleton, Schema.org markup provides the brain. Implementing structured data is essentially speaking the LLM’s native language. It removes the need for the model to “infer” what a string of text means.

When you wrap your content in JSON-LD, you are explicitly telling the model: “This string of text is an Author,” “This string is a Publication Date,” and “This string is a Citation.”

According to the Conversation Design Institute’s 2026 curriculum, structured content is essential for AI performance because it mitigates the risks associated with unstructured information, such as inconsistent outputs. When an LLM encounters a FAQPage schema or Article schema, it gains a confidence boost in the validity of the data.
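As an illustration of labeling an Author, a Publication Date, and Citations explicitly, the Python sketch below builds Article markup and wraps it in the `<script type="application/ld+json">` tag that pages use for JSON-LD. `headline`, `author`, `datePublished`, `dateModified`, and `citation` are real Schema.org properties; the function name and all sample values are hypothetical placeholders.

```python
import json


def article_jsonld(headline, author_name, published, modified, citations):
    """Hypothetical helper: Schema.org Article markup as a JSON-LD script tag."""
    data = {
        "@context": "https://schema.org",
        "@type": "Article",
        "headline": headline,
        "author": {"@type": "Person", "name": author_name},
        "datePublished": published,  # ISO 8601 date
        "dateModified": modified,
        "citation": citations,       # sources the article relies on
    }
    body = json.dumps(data, indent=2, ensure_ascii=False)
    return f'<script type="application/ld+json">\n{body}\n</script>'


tag = article_jsonld(
    "Structuring Content for LLM Citation",
    "Jane Doe",                             # placeholder author
    "2025-06-01",                           # placeholder dates
    "2026-01-15",
    ["https://example.com/source-study"],   # placeholder source
)
print(tag)
```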

Implementing Entity Identity

To truly build your personal brand with AI, you must use the sameAs property in your schema to link your content to recognized databases (like Wikidata or Crunchbase). This disambiguates your entities. If you are writing about “Apple” (the fruit) vs. “Apple” (the tech giant), schema is how you prevent the AI from getting confused and hallucinating a citation.
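Here is what `sameAs` linking might look like for an Organization, sketched in Python. `sameAs` is the Schema.org property for URLs that unambiguously identify the same entity; the company name, the Wikidata item ID, and the Crunchbase URL below are placeholders to replace with your entity’s real records. Requiring `https://` URLs is a simplifying assumption of this sketch.

```python
import json


def organization_jsonld(name, url, same_as):
    """Hypothetical helper: Organization markup with sameAs identity links."""
    for link in same_as:
        # This sketch only accepts absolute https URLs (a simplifying assumption).
        if not link.startswith("https://"):
            raise ValueError(f"sameAs entry is not an absolute https URL: {link}")
    return {
        "@context": "https://schema.org",
        "@type": "Organization",
        "name": name,
        "url": url,
        "sameAs": list(same_as),
    }


org = organization_jsonld(
    "Example Consulting",                                      # placeholder entity
    "https://www.example.com",
    [
        "https://www.wikidata.org/wiki/Q00000000",             # placeholder item ID
        "https://www.crunchbase.com/organization/example-consulting",  # placeholder
    ],
)
print(json.dumps(org, indent=2))
```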

Optimizing for Retrieval-Augmented Generation (RAG)

Most modern search experiences (like Google’s AI Overviews or Perplexity) utilize Retrieval-Augmented Generation (RAG). This system retrieves relevant documents and then generates an answer. To be the document that gets retrieved, your content needs “Retrieval Hooks.”

The Q&A Format

One of the most effective structures is the direct Question-Answer pair. LLMs are trained on vast amounts of Q&A data. When you format a section with a clear question as an H2 or H3, followed immediately by a direct, factual answer, you align perfectly with the model’s retrieval intent.

Example of a Citation-Ready Structure:

• Heading (H3): How frequently must content be updated for LLM citation?

• Answer (Paragraph): Content should be refreshed every 6–12 months. Research indicates that a two-year-old article updated five times outperforms a static two-month-old article in citation selection.

This format is irresistible to RAG systems because it requires zero synthesis. The model can simply “lift and shift” the answer.
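The same Question-Answer pair can also be exposed as FAQPage structured data, the schema type mentioned earlier. A minimal Python sketch, assuming the Schema.org FAQPage shape (`mainEntity` holding `Question` items, each with an `acceptedAnswer` of type `Answer`); the helper name is hypothetical and the text comes from the example above.

```python
import json


def faq_jsonld(pairs):
    """Hypothetical helper: build FAQPage markup from (question, answer) pairs."""
    return {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in pairs
        ],
    }


faq = faq_jsonld([
    ("How frequently must content be updated for LLM citation?",
     "Content should be refreshed every 6–12 months."),
])
print(json.dumps(faq, indent=2, ensure_ascii=False))
```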

Scientific Transparency and Methodology

If you are presenting data, you must structure your methodology clearly. The National Center for Biotechnology Information (NCBI/PMC) emphasizes transparency: methodology and limitations must be disclosed. LLMs are increasingly programmed to prioritize sources that show how they reached a conclusion, treating them as higher-authority nodes in the knowledge graph.

Contextual Nuance: Local vs. Global Signals

Structuring content isn’t a “one size fits all” operation. The structure must match the intent scope. For instance, the way you structure data for a hyper-local service differs from a national informational guide.

When analyzing local SEO vs national SEO, we see that local content requires heavy use of LocalBusiness schema and geographic coordinate markers within the text. National content requires broader entity linking. If you structure a local service page like a broad Wikipedia article, the LLM may fail to cite you for “near me” queries because the geographic constraints aren’t structurally explicit.
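For a local service page, the geographic constraints can be made explicit with LocalBusiness markup. A minimal Python sketch: `address` (a `PostalAddress`) and `geo` (a `GeoCoordinates`) are real Schema.org properties, while the business details and coordinates are placeholders, and the latitude/longitude range check is an added sanity check rather than a Schema.org rule.

```python
import json


def local_business_jsonld(name, street, city, region, postal_code, country, lat, lng):
    """Hypothetical helper: LocalBusiness markup with address and coordinates."""
    if not (-90 <= lat <= 90 and -180 <= lng <= 180):
        raise ValueError("latitude must be in [-90, 90], longitude in [-180, 180]")
    return {
        "@context": "https://schema.org",
        "@type": "LocalBusiness",
        "name": name,
        "address": {
            "@type": "PostalAddress",
            "streetAddress": street,
            "addressLocality": city,
            "addressRegion": region,
            "postalCode": postal_code,
            "addressCountry": country,
        },
        "geo": {"@type": "GeoCoordinates", "latitude": lat, "longitude": lng},
    }


# All values below are placeholders.
biz = local_business_jsonld(
    "Example Plumbing Co.", "123 Main St", "Springfield", "IL", "62701", "US",
    39.7817, -89.6501,
)
print(json.dumps(biz, indent=2))
```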

Freshness and Maintenance Signals

Finally, structure applies to time. LLMs have a bias toward “current” truth. A perfectly structured article from 2021 is often discarded in favor of a moderately structured article from 2026.

Maintenance Protocol for Citation:

- Last Reviewed Date: Explicitly state when the content was last verified.

- Version History: Briefly note what changed (e.g., “Updated Jan 2026 to reflect EU AI Act compliance”).

- Dynamic Data: Use tables for statistics so they can be easily updated without rewriting the narrative flow.

According to 2026 LLM content research, content sections must provide immediate, clearly understandable answers. If your data is stale, that immediate answer is “incorrect,” and the model will penalize your domain’s authority score.
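The maintenance protocol can be partly automated. This Python sketch keeps a version-history list, takes the most recent entry as the last-reviewed date, and flags the page once 12 months have passed, the upper end of the 6–12 month refresh window cited earlier in this guide. The entries, dates, and function names are hypothetical.

```python
from datetime import date


def months_between(start: date, end: date) -> int:
    """Whole calendar months elapsed from start to end."""
    months = (end.year - start.year) * 12 + (end.month - start.month)
    if end.day < start.day:
        months -= 1  # the final month is not complete yet
    return months


def needs_refresh(last_reviewed: date, today: date, max_months: int = 12) -> bool:
    return months_between(last_reviewed, today) >= max_months


# Hypothetical version history: (date, change note)
history = [
    (date(2025, 3, 1), "Initial publication"),
    (date(2026, 1, 10), "Updated to reflect EU AI Act compliance"),
]
last_reviewed = max(day for day, _ in history)
print("Last reviewed:", last_reviewed.isoformat())
print("Needs refresh:", needs_refresh(last_reviewed, today=date(2026, 2, 17)))
```

The same history list can feed both the visible “Last Reviewed” line and the version notes on the page, so the two never drift apart.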

FAQ: Common Questions on Structuring for AI

How does semantic HTML affect LLM citation?

Semantic tags like <article>, <section>, and <aside> define the scope of content. They tell the LLM which parts of the page are the core content versus navigation or ads, significantly improving extraction accuracy.
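To see why these tags help, here is a minimal Python sketch of an extractor that keeps only text inside `<article>` while skipping `<nav>`, `<aside>`, `<script>`, and `<style>`. The skip list and class name are assumptions for illustration; real crawlers and AI systems apply their own boilerplate-removal rules.

```python
from html.parser import HTMLParser


class MainContentExtractor(HTMLParser):
    """Hypothetical extractor: text inside <article>, minus non-core elements."""

    SKIP = {"nav", "aside", "script", "style"}

    def __init__(self):
        super().__init__()
        self.article_depth = 0
        self.skip_depth = 0
        self.parts = []

    def handle_starttag(self, tag, attrs):
        if tag == "article":
            self.article_depth += 1
        elif tag in self.SKIP:
            self.skip_depth += 1

    def handle_endtag(self, tag):
        if tag == "article" and self.article_depth:
            self.article_depth -= 1
        elif tag in self.SKIP and self.skip_depth:
            self.skip_depth -= 1

    def handle_data(self, data):
        # Keep text only when inside an article and outside any skipped element.
        if self.article_depth and not self.skip_depth and data.strip():
            self.parts.append(data.strip())


def main_text(html: str) -> str:
    parser = MainContentExtractor()
    parser.feed(html)
    parser.close()
    return " ".join(parser.parts)


sample = (
    "<nav>Home | Blog</nav><article><h1>Title</h1>"
    "<section><p>Core claim.</p></section><aside>Ad</aside></article>"
    "<footer>Copyright</footer>"
)
print(main_text(sample))
```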

What is the optimal length for a content section?

Ideally, a section should be between 200 and 300 tokens (roughly 150–225 words). This fits comfortably within most retrieval chunks, so the full context is captured without the section being split across chunk boundaries.
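A quick way to audit sections against this range is to estimate tokens from word counts with the same rough 0.75-words-per-token ratio used earlier in this guide. This Python sketch is only an approximation with hypothetical names; exact counts depend on each model’s tokenizer.

```python
WORDS_PER_TOKEN = 0.75  # rough heuristic, not a real tokenizer


def estimate_tokens(text: str) -> int:
    return round(len(text.split()) / WORDS_PER_TOKEN)


def budget_report(sections: dict, low: int = 200, high: int = 300) -> dict:
    """Label each section as short, ok, or long against a token range."""
    report = {}
    for heading, body in sections.items():
        tokens = estimate_tokens(body)
        if tokens < low:
            status = "short"
        elif tokens > high:
            status = "long"
        else:
            status = "ok"
        report[heading] = f"{status} (~{tokens} tokens)"
    return report


sections = {
    "Intro": "word " * 60,        # placeholder bodies of 60, 180, and 300 words
    "Schema": "word " * 180,
    "Case study": "word " * 300,
}
for heading, status in budget_report(sections).items():
    print(heading, status)
```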

Does Schema markup guarantee citation?

No, but it increases probability. Schema provides the “confidence score” an LLM needs to verify that the text it parsed is actually what it thinks it is. It moves data from “probable” to “verified.”

How do I handle conflicting information?

If your content contradicts the consensus (the LLM’s training data), you must structure it with high-authority citations and explicit reasoning (e.g., “Contrary to popular belief X, new data from Y suggests Z…”). This signals to the model that the deviation is intentional and data-backed, not an error.

Conclusion

Structuring content for LLM citation is the discipline of making your knowledge machine-readable. It requires shifting your mindset from “persuading a human” to “equipping a model.” By enforcing strict semantic hierarchy, leveraging schema as a second language, and maintaining rigorous freshness, you position your content to be the authoritative source that AI systems rely on.

The future belongs to the structured. Start treating your content like a database, and the citations will follow.
