JavaScript Rendering Audit Checklist: The 2026 Technical Guide

If you assume Googlebot sees exactly what you see in your Chrome browser the moment you hit “publish,” you are gambling with your organic visibility. In 2026, JavaScript is the backbone of the modern web, but it remains one of the biggest hurdles for search crawlers. I have seen countless beautiful, functional Single Page Applications (SPAs) that were effectively invisible to search engines because their content only appeared after JavaScript ran, and the crawler's rendering pass failed or was cut short.

This isn’t just about “making it work.” It is about ensuring your content is indexed, ranked, and served to users without delay. That is why a rigorous JavaScript rendering audit checklist is non-negotiable for developers and SEOs alike. When we look at the future of SEO for professional firms, technical accessibility is the bedrock of trust signals. If the machine cannot read your code, the human never sees your content.

The Core Mechanics: How Googlebot Actually Renders JS

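Before we dive into the checklist, we must understand the mechanism. Googlebot does not browse; it crawls, queues, and renders. When Googlebot hits a URL, it first grabs the initial HTML. It then places the page (every URL returning HTTP 200, unless a robots meta tag or header says not to index it) in a rendering queue, where an evergreen headless Chromium executes your JavaScript once Google's resources allow. Google has improved this pipeline significantly, but the queue is real: if your initial HTML is empty because it relies on client-side JavaScript to populate the DOM, Google sees none of your content until that rendering pass completes.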

How long a page waits in that queue varies. Google says it may be a few seconds or longer, and in practice the delay can stretch further depending on your site’s size and crawl budget. The WHATWG HTML Living Standard defines the event loop that browsers (and Googlebot’s Chromium-based renderer) follow, but the renderer will not wait indefinitely. Google does not publish a fixed timeout, yet if your scripts take too long to execute or consume too many resources, rendering can be cut short. The result? Partial indexing or a blank page.

Rendering Strategies Compared

To audit effectively, you must first identify what you are dealing with. Here is how the major rendering strategies stack up for SEO:

| Rendering Strategy | Mechanism | SEO Risk Level | Best Use Case |
|---|---|---|---|
| Server-Side Rendering (SSR) | HTML is generated on the server and sent ready-to-read. | Low | Content-heavy sites, News, E-commerce. |
| Client-Side Rendering (CSR) | Browser executes JS to build the DOM. Initial HTML is often empty. | High | Dashboards, gated apps, small portfolios. |
| Static Site Generation (SSG) | HTML is pre-built at build time. | Lowest | Blogs, documentation, marketing pages. |
| Dynamic Rendering | Server detects bots and serves static HTML; users get JS. | Medium | Legacy sites transitioning to SSR (Google treats as a workaround). |

1. The Technical Audit Checklist (Step-by-Step)

This checklist is designed to strip away the guesswork. We will move from basic visibility to advanced performance metrics.

Step 1: Verify Initial vs. Rendered HTML

The most critical check is the “diff” between your source code and the rendered DOM. If your core content (headings, body text, links) exists only in the rendered DOM, you are relying entirely on Google’s ability to execute your JavaScript perfectly.

How to check:

1. Right-click your page and select View Source. Look for your key content.

2. Right-click and select Inspect Element (DevTools) to see the Rendered DOM.

3. Compare the two. If the Source is empty but the DOM is full, you are using Client-Side Rendering.

Crawlers such as Screaming Frog (with JavaScript rendering enabled) can automate this comparison across thousands of pages and flag content that only appears after rendering.
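
If you want to script this check yourself, here is one minimal Python sketch, assuming you have already saved both versions of a page: the initial HTML (for example, from View Source) and the rendered HTML (for example, from the HTML tab of a Search Console live test, or via Copy > Copy outerHTML on the `<html>` node in DevTools). The names `SeoSignalExtractor` and `render_only_signals` are hypothetical, and the sketch only compares headings and link targets, not body copy.

```python
from __future__ import annotations

from html.parser import HTMLParser


class SeoSignalExtractor(HTMLParser):
    """Collects heading text and link hrefs from an HTML document."""

    HEADINGS = {"h1", "h2", "h3", "h4", "h5", "h6"}

    def __init__(self) -> None:
        super().__init__()
        self.headings: set[str] = set()
        self.links: set[str] = set()
        self._open_heading: str | None = None
        self._buffer: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag in self.HEADINGS:
            self._open_heading = tag
            self._buffer = []
        elif tag == "a":
            href = dict(attrs).get("href")
            if href:
                self.links.add(href)

    def handle_data(self, data):
        # Only text inside an open heading is recorded.
        if self._open_heading:
            self._buffer.append(data)

    def handle_endtag(self, tag):
        if tag == self._open_heading:
            text = " ".join("".join(self._buffer).split())  # collapse whitespace
            if text:
                self.headings.add(text)
            self._open_heading = None


def extract(html: str) -> SeoSignalExtractor:
    parser = SeoSignalExtractor()
    parser.feed(html)
    parser.close()
    return parser


def render_only_signals(initial_html: str, rendered_html: str) -> dict[str, list[str]]:
    """Return headings and links that exist only in the rendered DOM."""
    initial, rendered = extract(initial_html), extract(rendered_html)
    return {
        "headings": sorted(rendered.headings - initial.headings),
        "links": sorted(rendered.links - initial.links),
    }
```

Anything it returns exists only after JavaScript runs, which is exactly the content you are trusting the render queue to pick up.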

Step 2: Google Search Console Inspection

Never trust your own browser alone. You need to see through Google’s eyes. The URL Inspection Tool in Google Search Console is your source of truth here.

The Process:

• Enter your URL in GSC.

• Click “Test Live URL”.

• Click “View Tested Page” and review the “Screenshot” and “HTML” tabs.

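If the screenshot is blank or missing elements, or if the HTML tab cuts off halfway through your content, Googlebot is likely timing out, hitting a JavaScript error, or being blocked from a resource. The “More Info” tab lists page resources that failed to load and JavaScript console messages, which usually tells you which. Whether you are debating local SEO vs national SEO, the technical foundation remains the same: if Google can’t render it, you don’t rank.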

Step 3: Check for Blocked Resources

A common, self-inflicted wound is blocking JavaScript files in robots.txt. Years ago, SEOs blocked .js files to save crawl budget. Today, that is fatal. If Googlebot cannot load your script, it cannot render the page.

Audit Actions:

• Check robots.txt for Disallow: /*.js or similar rules.

• Ensure essential APIs delivering content are not blocked.

• Verify that external scripts (CDNs) are accessible.
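
To test specific script, stylesheet, or API URLs against your rules, here is a simplified Python sketch. It deliberately avoids the standard library's `urllib.robotparser`, which matches paths by plain prefix and does not understand the `*` and `$` wildcards Google supports. Instead it hand-rolls Google's documented precedence: the longest matching rule wins, and Allow wins a tie. Assumptions: the helper names are hypothetical, user-agent matching is an exact, case-insensitive token match with fallback to `*`, and percent-encoding normalization is ignored, so treat it as a first pass and confirm edge cases with the URL Inspection Tool.

```python
from __future__ import annotations

import re
from urllib.parse import urlsplit


def parse_groups(robots_txt: str) -> dict[str, list[tuple[str, str]]]:
    """Map each lower-cased user-agent token to its (directive, path) rules."""
    groups: dict[str, list[tuple[str, str]]] = {}
    agents: list[str] = []
    in_agent_run = False  # consecutive User-agent lines share one group
    for raw in robots_txt.splitlines():
        line = raw.split("#", 1)[0].strip()  # drop comments
        if ":" not in line:
            continue
        field, value = (part.strip() for part in line.split(":", 1))
        field = field.lower()
        if field == "user-agent":
            if not in_agent_run:
                agents = []
            agents.append(value.lower())
            groups.setdefault(value.lower(), [])
            in_agent_run = True
        elif field in ("allow", "disallow"):
            for agent in agents:
                groups[agent].append((field, value))
            in_agent_run = False
    return groups


def rule_matches(rule_path: str, target: str) -> bool:
    """Match a rule path where '*' is any run of characters and '$' anchors the end."""
    anchored = rule_path.endswith("$")
    body = rule_path[:-1] if anchored else rule_path
    pattern = ".*".join(re.escape(chunk) for chunk in body.split("*"))
    return re.match(pattern + ("$" if anchored else ""), target) is not None


def is_allowed(robots_txt: str, url: str, agent: str = "googlebot") -> bool:
    """Longest matching rule wins; on a tie, Allow beats Disallow."""
    groups = parse_groups(robots_txt)
    rules = groups.get(agent.lower(), groups.get("*", []))
    parts = urlsplit(url)
    target = (parts.path or "/") + (f"?{parts.query}" if parts.query else "")
    best_length, allowed = -1, True  # no matching rule means allowed
    for directive, rule_path in rules:
        if not rule_path:  # an empty Disallow blocks nothing
            continue
        if rule_matches(rule_path, target):
            length = len(rule_path)
            if length > best_length or (length == best_length and directive == "allow"):
                best_length, allowed = length, directive == "allow"
    return allowed


if __name__ == "__main__":
    robots = "User-agent: *\nDisallow: /*.js$\nAllow: /static/app.js\nDisallow: /api/\n"
    print(is_allowed(robots, "https://example.com/static/app.js"))     # True
    print(is_allowed(robots, "https://example.com/static/vendor.js"))  # False
```

In this example, the blanket `Disallow: /*.js$` would silently block every script except the one explicitly allowed, which is exactly the kind of legacy rule this step is meant to catch.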

Step 4: Validate Mobile Rendering

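Google uses mobile-first indexing. If your JavaScript renders perfectly on desktop but fails on mobile (perhaps due to a heavy script that mobile processors choke on), you will lose rankings. Google retired its standalone Mobile-Friendly Test in December 2023, so use the URL Inspection Tool (which tests with Googlebot Smartphone on mobile-first sites) alongside Lighthouse in Chrome DevTools. Lighthouse's mobile preset emulates a mid-range phone with CPU throttling, which often struggles with heavy JS payloads that a developer’s MacBook Pro handles easily.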

2. Performance & Core Web Vitals Integration

JavaScript is the primary culprit for poor Core Web Vitals, specifically Largest Contentful Paint (LCP) and Interaction to Next Paint (INP). Heavy execution blocks the main thread, delaying the point at which the user (and Google) perceives the page as loaded.

Benchmarks for 2026:

| Metric | “Good” Threshold | JS Impact |
|---|---|---|
| LCP (Largest Contentful Paint) | ≤ 2.5 seconds (75th percentile of page loads) | Heavy JS delays the render tree, pushing LCP back. |
| INP (Interaction to Next Paint) | ≤ 200 ms (75th percentile of page loads) | Long tasks block input responses. |
| Total Blocking Time (TBT) | < 200 ms (lab metric, not a Core Web Vital) | Measures time the main thread is blocked by JS; a lab proxy for INP. |
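
The TBT row is simple arithmetic: each main-thread task contributes only the portion of its duration beyond 50 ms. The Python sketch below shows that calculation plus a rating helper for the two field metrics, assuming you already have task durations in milliseconds (restricted to the window Lighthouse measures, between First Contentful Paint and Time to Interactive) and 75th-percentile field values. The “poor” boundaries (4 s for LCP, 500 ms for INP) come from Google's published Core Web Vitals thresholds; the function names are hypothetical.

```python
from __future__ import annotations

LONG_TASK_MS = 50  # only time beyond 50 ms in a task counts as "blocking"

# (good_max, needs_improvement_max) in milliseconds; anything above is "poor".
THRESHOLDS = {
    "LCP": (2500, 4000),
    "INP": (200, 500),
}


def total_blocking_time(task_durations_ms: list[float]) -> float:
    """Sum the part of each main-thread task that exceeds 50 ms."""
    return sum(max(0.0, duration - LONG_TASK_MS) for duration in task_durations_ms)


def rate(metric: str, value_ms: float) -> str:
    """Classify a 75th-percentile field value for LCP or INP."""
    good_max, needs_improvement_max = THRESHOLDS[metric]
    if value_ms <= good_max:
        return "good"
    if value_ms <= needs_improvement_max:
        return "needs improvement"
    return "poor"
```

For example, tasks of 30, 120, 250, and 60 ms contribute 0 + 70 + 200 + 10 = 280 ms of blocking time, which misses the 200 ms target even though the longest task is only a quarter of a second.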

Optimizing script load order (deferring non-critical JS and inlining critical CSS) is essential to passing these thresholds.
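
A quick way to find candidates for deferral is to list external scripts in the `<head>` that have neither `defer` nor `async` and are not ES modules (which are deferred by default). This Python sketch does that with the standard library's `html.parser`. The names are hypothetical, it assumes the page has an explicit `<head>` element, and it ignores inline scripts and scripts in the `<body>`, which can also block parsing.

```python
from __future__ import annotations

from html.parser import HTMLParser


class BlockingScriptFinder(HTMLParser):
    """Flags external <script> tags in <head> that pause HTML parsing."""

    def __init__(self) -> None:
        super().__init__()
        self.in_head = False
        self.blocking: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag == "head":
            self.in_head = True
        elif tag == "script" and self.in_head:
            attributes = dict(attrs)  # boolean attributes such as defer map to None
            src = attributes.get("src")
            is_deferred = "defer" in attributes or "async" in attributes
            is_module = attributes.get("type") == "module"  # modules defer by default
            if src and not is_deferred and not is_module:
                self.blocking.append(src)

    def handle_endtag(self, tag):
        if tag == "head":
            self.in_head = False


def find_blocking_scripts(html: str) -> list[str]:
    """Return the src of every parser-blocking external script in <head>."""
    finder = BlockingScriptFinder()
    finder.feed(html)
    finder.close()
    return finder.blocking
```

Each URL it returns is a script the browser (and Googlebot's renderer) must download and execute before it can continue building the page.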

3. Advanced Diagnostics & Edge Cases

Structured Data (JSON-LD) Integrity

Many modern sites inject Schema.org markup dynamically using JavaScript. While Google can read this, it is risky. If the rendering fails, your Rich Results eligibility vanishes.

Best Practice:

• Prefer server-side injected JSON-LD in the <head>.

• If using dynamic injection, use the Rich Results Test to confirm Google sees the code post-render.
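
To confirm whether your JSON-LD ships in the initial HTML or is injected by JavaScript, you can run the same extraction against both the raw source and the rendered HTML and compare. This Python sketch (hypothetical names, standard library only) pulls every `<script type="application/ld+json">` block, parses it, and returns the `@type` values, including items nested under `@graph`. Blocks that fail to parse are reported as `INVALID_JSON`, since Google cannot use markup it cannot parse.

```python
from __future__ import annotations

import json
from html.parser import HTMLParser


class JsonLdExtractor(HTMLParser):
    """Collects the raw text of <script type="application/ld+json"> blocks."""

    def __init__(self) -> None:
        super().__init__()
        self._capturing = False
        self._chunks: list[str] = []
        self.blocks: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag == "script" and dict(attrs).get("type") == "application/ld+json":
            self._capturing = True
            self._chunks = []

    def handle_data(self, data):
        if self._capturing:
            self._chunks.append(data)

    def handle_endtag(self, tag):
        if tag == "script" and self._capturing:
            self.blocks.append("".join(self._chunks))
            self._capturing = False


def schema_types(html: str) -> list[str]:
    """Return the @type of every JSON-LD item found in the HTML."""
    parser = JsonLdExtractor()
    parser.feed(html)
    parser.close()
    types: list[str] = []
    for block in parser.blocks:
        try:
            data = json.loads(block)
        except json.JSONDecodeError:
            types.append("INVALID_JSON")
            continue
        if isinstance(data, dict) and "@graph" in data:
            items = data["@graph"]
        elif isinstance(data, list):
            items = data
        else:
            items = [data]
        for item in items:
            if isinstance(item, dict) and "@type" in item:
                types.append(str(item["@type"]))
    return types
```

If `schema_types` returns an empty list for the source but finds types such as `Product` in the rendered HTML, your Rich Results eligibility depends entirely on rendering succeeding.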

Hash Fragments and SPAs

If your Single Page Application uses hash routing (e.g., site.com/#/about), you are in trouble. Google generally ignores everything after the #. You must use the History API (pushState) to create clean URLs (site.com/about) that look like separate pages to the crawler.
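
The routing fix itself belongs in your front-end router, which should call `history.pushState()` rather than writing to `location.hash`. On the audit side, a short Python sketch can show how hash routes collapse: once the fragment is dropped, `/#/about` and `/#/pricing` become the same URL. The function name is hypothetical, and it assumes you already have a list of link URLs from a crawl. It treats fragments starting with `/` or `!` (the old `#!` pattern) as routes and ignores ordinary in-page anchors such as `#reviews`.

```python
from __future__ import annotations

from collections import defaultdict
from urllib.parse import urldefrag


def find_hash_routes(links: list[str]) -> dict[str, list[str]]:
    """Group hash-routed links by the URL left after the fragment is dropped."""
    collapsed: dict[str, list[str]] = defaultdict(list)
    for link in links:
        base, fragment = urldefrag(link)
        # "#/about" and "#!/about" look like routes; "#reviews" is an in-page anchor.
        if fragment.startswith("/") or fragment.startswith("!"):
            collapsed[base].append(link)
    return dict(collapsed)
```

Any key with more than one link is a set of "pages" that a crawler sees as a single URL.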

Even if you just want to build your personal brand with AI, using a sleek React-based portfolio without proper SEO rendering can leave you invisible to potential clients.

4. Tools of the Trade: A Comparison

You cannot perform a deep audit with manual checks alone. Here is how the industry standard tools compare for JS auditing.

| Tool | Cost | JS Rendering Capability | Best For |
|---|---|---|---|
| Google Search Console | Free | High (Official Googlebot view) | Spot-checking individual URLs. |
| Screaming Frog SEO Spider | Free up to 500 URLs; paid license required for JS rendering | High (Chromium rendering, paid version) | Bulk auditing entire sites. |
| Sitebulb | Paid | High (Visual rendering graphs) | Visualizing audit data and hints. |
| Chrome DevTools | Free | High (Local debugging) | Diagnosing performance bottlenecks. |

Combining these tools with a standard SEO audit process helps you cover both site-wide (macro) and page-level (micro) issues affecting your site.

Key Takeaways

• Default to SSR or SSG: Whenever possible, serve HTML from the server. It is more robust, usually faster to first paint, and does not depend on the rendering queue.

• The Queue is Real: Relying on Client-Side Rendering adds a delay to your indexing speed.

• Diff Your HTML: Always compare raw source code vs. rendered DOM to spot missing content.

• Performance is Indexing: Slow JS execution leads to timeouts, which look like empty pages to Googlebot.

• Don’t Block Scripts: Ensure robots.txt allows access to all local and external JS resources needed for rendering.

FAQ Section

1. How does Googlebot render JavaScript-heavy pages in 2026?

Googlebot processes pages in two waves: first, it crawls the initial HTML (the HTTP response). It then queues the page for rendering and, when resources allow, executes the JavaScript to see the full content. This second stage can be delayed.

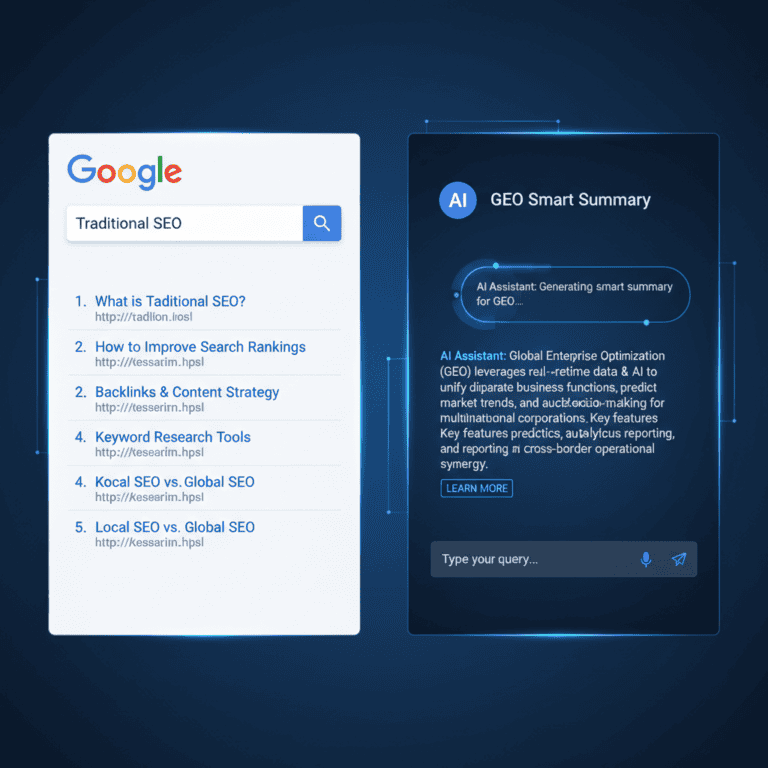

2. What is the difference between client-side and server-side rendering for SEO?

Server-Side Rendering (SSR) sends fully populated HTML to the bot, so your content can be indexed from the initial crawl without waiting on the rendering queue. Client-Side Rendering (CSR) sends an empty shell that requires the bot to execute JavaScript to see content, introducing risks of timeouts and indexing delays.

3. Which free Google tools best test JS rendering issues?

The Google Search Console URL Inspection Tool and the Rich Results Test are the best free, official tools to verify exactly what Googlebot sees; both show the rendered HTML and a screenshot of the tested page.

4. When should I implement SSR over client-side rendering?

You should implement SSR if your content changes frequently, you have a large number of pages (e.g., e-commerce), and SEO is a primary revenue driver. CSR is acceptable for private dashboards or tools where SEO is not a priority.

5. How do I compare initial HTML vs. fully rendered HTML?

You can use the “View Source” (Initial) vs. “Inspect Element” (Rendered) method in your browser, or use Screaming Frog in “JavaScript Rendering” mode to analyze the differences in bulk.