Strategies For Generative Engine Optimization: The 2026 Guide

Search has fundamentally changed. We are no longer just optimizing for a list of ten blue links; we are optimizing for the single, synthesized answer that sits above them. As an SEO consultant working with businesses in Mumbai and beyond, I have watched the rapid evolution from keyword matching to intent modeling. Today, if you want your brand to survive the shift to AI-first discovery, you must master specific strategies for generative engine optimization (GEO).

In this guide, I will break down exactly how Generative AI (GenAI) engines select sources, the technical frameworks you need to implement, and the content tactics that drive measurable citation visibility.

The Shift From Ranking to Citation

Traditional SEO was about convincing a ranking algorithm that your page deserved to be at the top of a list. GEO is different. It is about convincing a Large Language Model (LLM) that your content is the most authoritative, relevant ingredient for the answer it is baking.

When I analyze the landscape for clients, I see a clear distinction in how these engines process information. It is not enough to just have the keywords on the page. The engine needs to trust the entity behind the content. This connects deeply to the future of SEO for professional firms, where trust signals and verifiable expertise become the primary currency of visibility.

SEO vs. GEO: The Core Differences

To build an effective strategy, we must first understand the mechanism. Here is how the two disciplines compare:

| Feature | Traditional SEO | Generative Engine Optimization (GEO) |
|---|---|---|
| Primary Goal | Rank #1 on a SERP list | Be cited as a source in an AI answer |
| Measurement | Click-Through Rate (CTR), Rankings | Citation Frequency, Share of Model |
| Content Focus | Keywords, Length, Backlinks | Semantic Relevance, Statistics, Authority |
| Technical Key | Core Web Vitals, Mobile Friendliness | Structured Data, llms.txt, Entity Salience |
| User Intent | Navigational / Transactional | Informational with Commercial Undertones |

Core GEO Strategies That Drive Citations

Through rigorous testing and analysis of patent filings, we have identified specific content attributes that LLMs prioritize. It is not magic; it is math. The engines look for high-information-density nodes that support their generated narrative.

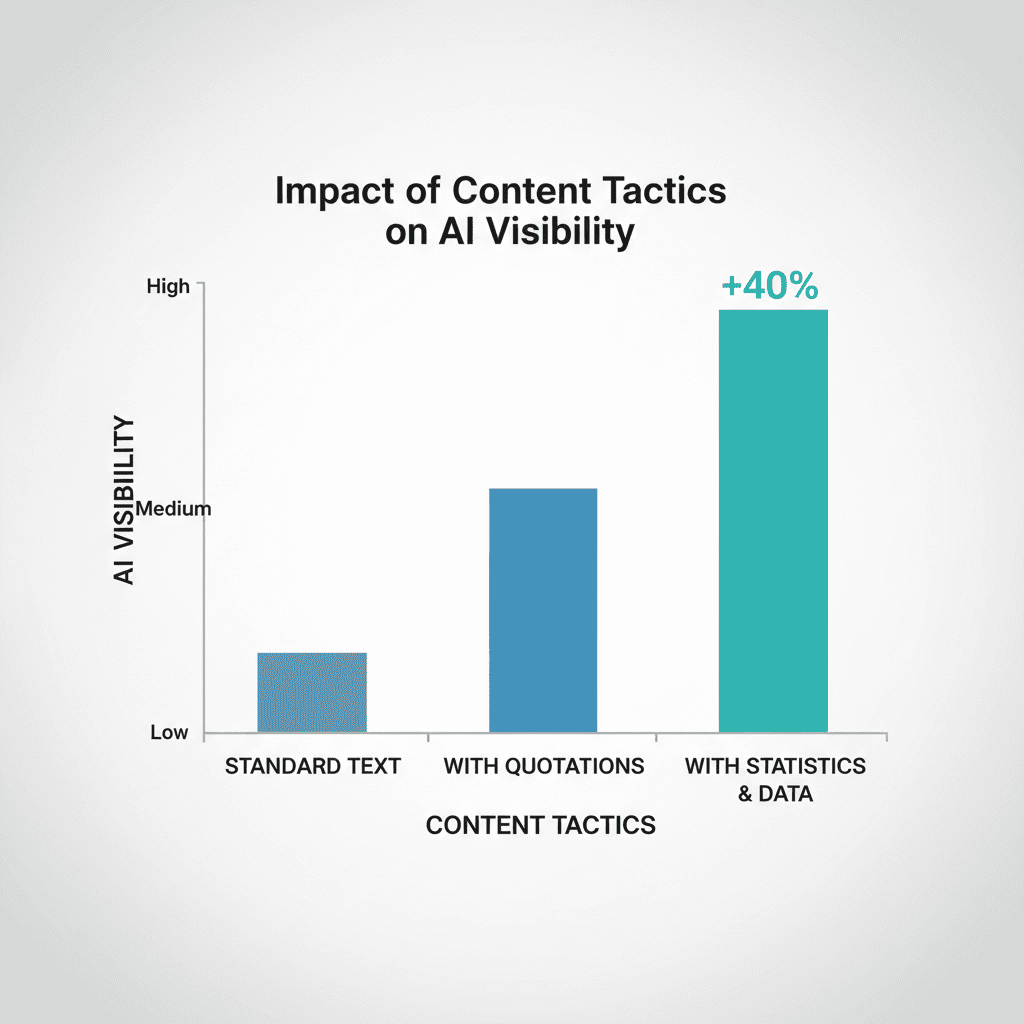

1. The Power of Statistics and Quotations

One of the most powerful levers we have found is the inclusion of hard data. Generative engines favor specificity: a concrete, sourced number gives the model a verifiable claim it can quote, where a vague statement leaves it to paraphrase or guess.

According to research published on arXiv (the paper "GEO: Generative Engine Optimization" by Aggarwal et al.), modifying content to include relevant statistics can boost visibility in AI responses by up to 40%. The study specifically highlighted that “Statistics Addition” and “Quotation Addition” significantly improved the Position-Adjusted Word Count of sources.

Actionable Tactic:

• Audit your top 10 performing pages.

• Replace generic qualifiers (e.g., “many people”) with specific percentages (e.g., “68% of users”).

• Cite primary sources to lend credibility to your claims.
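The audit step above can be partly automated. The following is a minimal sketch that flags vague qualifiers in page copy so an editor can replace them with sourced figures. The phrase list and the function name `find_vague_qualifiers` are illustrative assumptions, not part of any established tool.

```python
import re

# Hypothetical starter list of vague qualifiers; extend it for your own content.
VAGUE_QUALIFIERS = ["many people", "most users", "a lot of", "some studies", "often"]

# One case-insensitive pattern that matches any listed phrase on word boundaries.
_PATTERN = re.compile(
    r"\b(" + "|".join(re.escape(q) for q in VAGUE_QUALIFIERS) + r")\b",
    re.IGNORECASE,
)


def find_vague_qualifiers(text: str) -> list:
    """Return (line_number, phrase) pairs for every vague qualifier found."""
    hits = []
    for line_number, line in enumerate(text.splitlines(), start=1):
        for match in _PATTERN.finditer(line):
            hits.append((line_number, match.group(0).lower()))
    return hits


sample = "Many people abandon slow pages.\n68% of users leave after three seconds."
print(find_vague_qualifiers(sample))  # prints [(1, 'many people')]
```

Each hit is a candidate for a specific, cited statistic. The script only locates the phrases; choosing an accurate replacement figure remains an editorial task.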

2. Structuring for Machine Readability

LLMs consume structure before they consume nuance. If your content is a wall of text, the model struggles to parse the hierarchy of information. We have seen that restructuring technical documentation with clear headings, short summaries, and FAQ schema can drastically improve citation rates.

This is particularly effective when you build your personal brand with AI, as clear structure helps the engine associate your name (the entity) with specific topics (the expertise).

3. The “Direct Answer” Protocol

Generative engines are essentially prediction machines trying to complete a user’s thought. To be cited, your content must provide the “answer token” they are looking for.

How to implement this:

1. Identify the core question your page answers.

2. Provide a concise, direct answer (40-60 words) immediately after the H2.

3. Follow this with the “Why” and “How” to add depth (Information Gain).
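The 40-60 word guideline in step 2 is easy to check mechanically. Here is a small sketch, assuming each section body is available as plain text with paragraphs separated by blank lines. The function names are hypothetical.

```python
def direct_answer_word_count(section_body: str) -> int:
    """Count the words in the first non-empty paragraph of a section body."""
    for paragraph in section_body.split("\n\n"):
        if paragraph.strip():
            return len(paragraph.split())
    return 0


def has_direct_answer(section_body: str, min_words: int = 40, max_words: int = 60) -> bool:
    """True when the opening paragraph falls inside the target word range."""
    return min_words <= direct_answer_word_count(section_body) <= max_words


# Example: a 50-word opening paragraph followed by supporting detail.
body = " ".join(["word"] * 50) + "\n\nSupporting detail goes here."
print(has_direct_answer(body))  # prints True
```

Running this across every H2 section of a page gives a quick list of sections whose opening paragraph is too thin or too long to serve as a direct answer.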

Technical Implementation for AI Crawlers

While content is king, technical infrastructure is the castle. If the AI bots cannot crawl or understand your site, your content strategy is irrelevant.

The Role of llms.txt

Just as robots.txt tells traditional crawlers what they may fetch, the proposed llms.txt file is emerging as a convention for communicating with AI agents. It is a Markdown file served from the site root that gives large language models a concise summary of the site and links to its most important pages, which can help them find and attribute your content correctly. Adoption is still growing and support varies by engine, so treat early implementation as a low-cost signal of technical maturity to engines like Perplexity and Claude, not as a guaranteed visibility factor.
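As an illustration, the sketch below generates a file in the layout described by the llms.txt proposal: an H1 title, a blockquote summary, and H2 sections containing Markdown link lists. The site name, URLs, and the helper `build_llms_txt` are placeholders chosen for this example.

```python
def build_llms_txt(site_name: str, summary: str, sections: dict) -> str:
    """Render llms.txt content: H1 title, blockquote summary, H2 link sections.

    `sections` maps a heading to a list of (title, url, note) tuples.
    """
    lines = [f"# {site_name}", "", f"> {summary}", ""]
    for heading, links in sections.items():
        lines.append(f"## {heading}")
        lines.append("")
        for title, url, note in links:
            lines.append(f"- [{title}]({url}): {note}")
        lines.append("")
    return "\n".join(lines).rstrip() + "\n"


# Placeholder data; replace with your own pages.
content = build_llms_txt(
    "Example Consulting",
    "SEO and GEO guides for professional firms.",
    {"Guides": [("GEO Guide", "https://example.com/geo-guide", "Core GEO strategies")]},
)
print(content)
```

The output would be saved as `/llms.txt` at the site root. Keeping the generator in code makes it easy to regenerate the file whenever key pages change.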

Schema Markup as Your Digital Passport

Structured data is non-negotiable for GEO. It translates your human-readable content into machine-readable entities. According to the PEEC AI strategic framework, a robust GEO strategy requires a four-phase approach that heavily emphasizes technical optimization in the early stages.

Critical Schema Types for GEO:

• Article: Defines the content type and date.

• Person/Organization: Establishes E-E-A-T (Experience, Expertise, Authoritativeness, Trustworthiness).

• FAQPage: Directly feeds Q&A formats in AI overviews.

• ItemList: Helps engines parse lists and rankings easily.
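To make the FAQPage type concrete, here is a minimal sketch that builds the JSON-LD from question and answer pairs using the schema.org `FAQPage`, `Question`, and `Answer` types. The helper name `faq_jsonld` and the sample text are illustrative.

```python
import json


def faq_jsonld(qa_pairs: list) -> str:
    """Build FAQPage JSON-LD from a list of (question, answer) tuples."""
    data = {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in qa_pairs
        ],
    }
    return json.dumps(data, indent=2)


markup = faq_jsonld([
    ("What is GEO?", "GEO is the practice of optimizing content to be cited in AI answers."),
])
print(markup)
```

The resulting string belongs inside a `<script type="application/ld+json">` tag in the page head. The questions in the markup should match the visible FAQ text on the page.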

Domain-Specific Efficacy Matrices

Not all industries see the same lift from GEO efforts. A “one size fits all” approach is dangerous here. The effectiveness of these strategies varies significantly based on your sector and the nature of the queries.

Based on current observations, here is how efficacy ranges break down:

| Industry | Est. Visibility Gain | Primary Driver | Risk Factor |
|---|---|---|---|
| SaaS / Tech | 30–40% | Documentation Structure, Code Snippets | High competition for technical terms |
| News / Editorial | 8–15% | Freshness, Author Authority | Hallucination of fake news citations |
| E-Commerce | 5–12% | Price Data, Reviews Schema | Product availability data lag |
| Local Services | 10–20% | NAP (Name, Address, Phone) Consistency, Local Reviews | Confusion with similar business names |

This variance highlights why you must decide between local SEO vs national SEO approaches early in your campaign. Local businesses need to focus on entity consistency, while national brands must prioritize topical authority and statistical depth.

Measuring What Matters

In traditional SEO, we tracked rankings. In GEO, we must track Presence. The metrics are evolving, but the goal remains the same: revenue impact.

Key Performance Indicators (KPIs) for GEO

- Citation Frequency: How often is your brand mentioned in AI-generated responses for your target keywords?

- Share of Model: Similar to Share of Voice, this measures your visibility relative to competitors within a specific LLM.

- Position-Adjusted Word Count: A metric that values citations appearing earlier in the response higher than those buried at the bottom.
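The three KPIs above can be sketched in a few lines, assuming you have already collected AI responses and recorded which source each one cites. The data shapes, function names, and the exponential decay used for position weighting are assumptions for illustration. Commercial tracking tools may define these metrics differently.

```python
import math
from typing import List, Optional, Tuple


def citation_frequency(responses: List[List[str]], brand: str) -> float:
    """Fraction of responses whose cited sources include the brand."""
    if not responses:
        return 0.0
    return sum(brand in cited for cited in responses) / len(responses)


def share_of_model(responses: List[List[str]], brand: str) -> float:
    """Brand citations divided by all citations across the responses."""
    total = sum(len(cited) for cited in responses)
    if total == 0:
        return 0.0
    return sum(cited.count(brand) for cited in responses) / total


def position_adjusted_word_count(
    sentences: List[Tuple[str, Optional[str]]], brand: str
) -> float:
    """Word share of sentences citing the brand, weighted so earlier ones count more.

    `sentences` holds (text, cited_source) pairs in response order.
    The exp(-index / n) weight is an assumed decay, chosen for illustration.
    """
    n = len(sentences)
    total_words = sum(len(text.split()) for text, _ in sentences)
    if total_words == 0:
        return 0.0
    weighted = sum(
        len(text.split()) * math.exp(-index / n)
        for index, (text, source) in enumerate(sentences)
        if source == brand
    )
    return weighted / total_words


# Each inner list holds the sources cited by one AI response (placeholder domains).
responses = [["a.com", "b.com"], ["b.com"], ["c.com"]]
print(citation_frequency(responses, "b.com"))
print(share_of_model(responses, "b.com"))  # prints 0.5
```

Tracking these numbers per engine and per keyword set over time shows whether content changes are moving citations in the right direction.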

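To measure this accurately, your technical GEO setup should separate AI traffic from human traffic. In GA4, you can segment sessions referred by AI assistants, such as visits arriving from chatgpt.com or perplexity.ai. AI crawlers generally do not execute the GA4 JavaScript tag, so identify them in your server access logs by user agent. Together, these views let you differentiate between a human user reading your blog and an AI agent fetching it for citations.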
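One way to separate AI crawler requests from human visits is to scan server access logs for known crawler user-agent tokens. The sketch below assumes logs in the common "combined" format, where the user agent is the last quoted field. The token list is a partial, assumed starting point that should be checked against each vendor's current documentation.

```python
import re
from collections import Counter

# Assumed, partial list of AI crawler user-agent tokens; verify before relying on it.
AI_CRAWLER_TOKENS = ["GPTBot", "ChatGPT-User", "OAI-SearchBot", "ClaudeBot", "PerplexityBot", "CCBot"]


def count_ai_crawler_hits(log_lines) -> Counter:
    """Count requests per AI crawler token found in the user-agent field."""
    counts = Counter()
    for line in log_lines:
        quoted_fields = re.findall(r'"([^"]*)"', line)
        if not quoted_fields:
            continue
        user_agent = quoted_fields[-1].lower()  # last quoted field in combined format
        for token in AI_CRAWLER_TOKENS:
            if token.lower() in user_agent:
                counts[token] += 1
                break
    return counts


sample_log = [
    '203.0.113.5 - - [17/Feb/2026:10:00:00 +0000] "GET /guide HTTP/1.1" 200 5120 "-" '
    '"Mozilla/5.0 (compatible; GPTBot/1.2; +https://openai.com/gptbot)"',
    '198.51.100.7 - - [17/Feb/2026:10:00:05 +0000] "GET /guide HTTP/1.1" 200 5120 "-" '
    '"Mozilla/5.0 (Windows NT 10.0; Win64; x64)"',
]
print(count_ai_crawler_hits(sample_log))  # prints Counter({'GPTBot': 1})
```

User-agent strings can be spoofed, so counts from this approach are an estimate. Cross-checking against the IP ranges that vendors publish gives a more reliable picture.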

Frequently Asked Questions

What is the difference between GEO and traditional SEO?

Traditional SEO focuses on ranking links on a search results page based on keywords and backlinks. GEO focuses on optimizing content to be synthesized and cited by AI models, prioritizing semantic relevance, structured data, and authority signals.

How often should I refresh content for GEO?

For high-value pages, a quarterly refresh cadence is recommended. AI models prioritize freshness as a proxy for accuracy. However, do not just change the date; update statistics, add new examples, and refine the schema markup.

Does schema markup actually help with AI citations?

Yes, absolutely. Schema markup (JSON-LD) provides the context LLMs need to confidently identify entities and relationships within your content. Without it, the engine has to guess, and engines that guess are less likely to cite you.

Can I optimize for specific engines like ChatGPT or Perplexity?

Yes. Different engines have different biases. For example, Grok relies heavily on real-time data from X (Twitter), while Google’s AI Overviews lean on traditional web authority signals. A diversified strategy covers all bases.

Conclusion

The era of keyword stuffing is over. Strategies for generative engine optimization require a pivot toward authority, data density, and technical precision. By implementing the frameworks discussed here (statistical evidence, robust schema, and domain-specific tactics), you can secure your place as a trusted source in the AI-driven future.

Start by auditing your most valuable content for “fact density” and ensure your technical foundation is ready for the bots. The first movers in this space are already seeing double-digit visibility gains. Don’t get left behind.
